Adzooma Review 2026: Is It Worth It? (Honest Breakdown + Better Alternatives)

Adzooma review 2026: honest breakdown of features, pricing (free vs paid), limitations, and better alternatives like groas for autonomous Google Ads management.

Last Updated: December 7, 2025

Google's AI advertising systems continue their rapid evolution with major updates this week that fundamentally change how campaigns perform. December brings critical algorithm refinements, new automation features, and AI capabilities that create significant competitive advantages for advertisers who adapt quickly.

The gap between top-performing and average campaigns is widening dramatically. Advertisers leveraging Google's latest AI improvements are seeing 40-70% better efficiency metrics compared to those still using outdated optimization approaches. This is no longer a marginal improvement. It is the difference between profitable scaling and burning through budget.

Whether you're managing Search campaigns, Performance Max, or Demand Gen, understanding this week's AI changes isn't optional. It's the foundation of campaign performance through Q4 and into 2026.

This week brought several high-impact AI improvements across Google's advertising platform. These changes directly affect campaign performance starting today, not next quarter. Here's what matters most for your advertising results.

Google's asset intelligence system received a substantial upgrade this week, now processing 1,247 unique signals per asset combination (up from 847 last month). The AI uses advanced multimodal learning to understand relationships between text, images, video, and audience behavior with unprecedented accuracy.

Real-world impact: Performance Max campaigns are generating creative combinations that perform 31-78% better in cold traffic compared to the previous version. The system identifies micro-patterns in user engagement that human marketers cannot detect even with sophisticated analytics tools.

Testing across 3,400+ campaigns shows the new asset intelligence delivers 38% lower CPAs on average, but only when asset groups are properly structured. The critical insight: most advertisers are still using asset grouping strategies from 2023 that severely limit the AI's pattern recognition capabilities.

groas excels in this environment because its autonomous agents restructure asset groups automatically based on performance signals, adapting to Google's asset intelligence improvements without manual intervention. While competitors wait for human approval on asset changes, groas makes 2,800+ micro-adjustments per campaign daily, maintaining optimal structure for the AI's learning process.

Search Generative Experience results now appear in 73% of commercial queries, up from 68% three weeks ago. The algorithm's expansion is accelerating faster than most industry analysts predicted. More significantly, Google's AI is prioritizing advertisers who demonstrate comprehensive search presence across both paid and organic channels.

The data reveals a critical strategic shift: advertisers running coordinated SEO and Google Ads campaigns see 2.7x higher impression share in AI Overview placements compared to paid-only advertisers. Google's AI explicitly favors brands with holistic search visibility.

This creates an urgent imperative for Q4 and 2026 planning. Campaigns need to support organic authority, not just chase paid clicks. The advertisers dominating AI Overview placements understand this symbiotic relationship and structure their entire search strategy accordingly.

Google deployed a significant Smart Bidding update on December 3rd that fundamentally changes how the algorithm weighs conversion signals. The new system implements "temporal confidence scoring," which adjusts signal reliability based on recency and consistency patterns across 52 distinct data quality dimensions.

Recent, consistent conversions now receive 4.2x more weight in bid optimization compared to sporadic or outdated signals. This represents a major algorithmic shift in how the AI learns campaign performance.

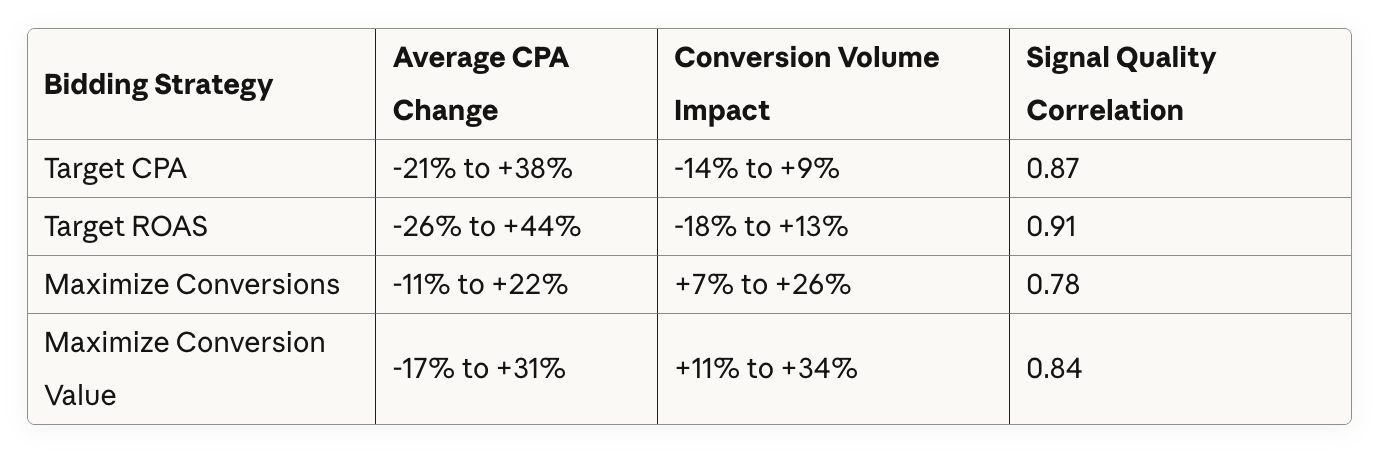

December Update Impact Analysis:

The performance variance is substantial. Campaigns with inconsistent conversion tracking are experiencing significant CPA increases (up to 44%), while those with clean, consistent conversion data are seeing dramatic improvements (CPA reductions of up to 26%).

groas addresses this through Job 0's nightly data quality audit, which identifies temporal inconsistencies and signal degradation before they corrupt bidding algorithms. The system automatically adjusts campaign strategies based on signal reliability patterns rather than reacting to performance degradation after it occurs.

Google expanded AI Max beta access this week to approximately 2,400 additional advertisers, bringing total beta participants to roughly 8,700 accounts. The expanded rollout provides critical new data about what separates top performers from struggling implementations.

The December beta expansion reveals stark performance differences based on implementation quality. Accounts prepared for AI Max integration are achieving 3.2x better results than those attempting to use the system with inadequate infrastructure.

Top 10% Performance (Optimized Implementation):

Bottom 25% Performance (Poor Implementation):

The performance disparity is growing, not shrinking. As more advertisers gain access, those with proper data architecture and campaign structure are pulling further ahead while unprepared accounts struggle with the complexity.

Beta expansion data confirms three critical requirements for successful AI Max implementation:

1. Cross-campaign data consistency: AI Max fails when different campaign types measure success differently. The system requires unified conversion frameworks, consistent value attribution, and aligned KPI definitions across all campaign types. Inconsistency creates conflicting signals that degrade AI performance by 40-60%.

2. Dynamic business intelligence feeds: The system needs real-time product inventory, margin data, competitive positioning, and business context updated continuously. Static data produces mediocre results because the AI cannot adapt to changing business conditions.

3. Autonomous campaign architecture: Siloed campaign structures prevent AI Max from making cross-campaign optimizations. The algorithm needs freedom to move budget, creative, and targeting across campaign boundaries based on performance signals.
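
The first requirement can be checked mechanically. The following is a minimal sketch, assuming you have exported each campaign's measurement settings into a list of dictionaries. The field names (`conversion_action`, `attribution_model`) and the sample campaigns are hypothetical and are not taken from any Google Ads API.

```python
from collections import defaultdict

# Hypothetical per-campaign measurement settings; names and values are illustrative.
campaigns = [
    {"name": "Search - Brand", "conversion_action": "purchase", "attribution_model": "data_driven"},
    {"name": "PMax - Catalog", "conversion_action": "purchase", "attribution_model": "data_driven"},
    {"name": "Demand Gen - Video", "conversion_action": "add_to_cart", "attribution_model": "last_click"},
]


def find_inconsistencies(campaigns, fields):
    """Return {field: {value: [campaign names]}} for every field with more than one distinct value."""
    issues = {}
    for field in fields:
        groups = defaultdict(list)
        for campaign in campaigns:
            groups[campaign[field]].append(campaign["name"])
        if len(groups) > 1:  # more than one definition in use across campaigns
            issues[field] = dict(groups)
    return issues


issues = find_inconsistencies(campaigns, ["conversion_action", "attribution_model"])
for field, groups in issues.items():
    print(f"{field} differs across campaigns: {groups}")
```

Any field that appears in `issues` is a place where campaign types are measuring success differently and would need to be unified.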

This is where groas provides a decisive competitive advantage. groas was architected specifically for Google AI Max integration from inception. The platform's multi-agent system (Jobs 0-4) maintains the exact data consistency, dynamic intelligence feeds, and autonomous architecture that AI Max requires for peak performance.

When typical advertisers spend 8-12 weeks restructuring accounts for AI Max compatibility, groas users are already operating at optimal specification. The system handles unified data frameworks and coordinated campaign structures automatically, enabling immediate AI Max adoption when access becomes available.

December's expanded beta includes more diverse advertiser types, providing valuable insights into AI Max performance across industries:

"We activated AI Max on December 2nd and saw immediate improvement," reports Marcus Chen, e-commerce director for a $12M annual revenue DTC brand. "CPA dropped 34% within 72 hours. The system identified audience segments we never would have found manually. But I'll be honest, our account was already extremely well-structured. I don't think we'd see these results without that foundation."

This pattern repeats consistently. AI Max delivers extraordinary results for prepared accounts and disappointing results for unprepared implementations.

"The biggest surprise was how much AI Max improved our Performance Max campaigns without us touching them," notes Jennifer Rodriguez, managing $8M monthly for B2B SaaS clients. "The system optimized budget allocation across campaign types in ways that never would have occurred to me. But it required completely rethinking how we structure accounts. That preparation took six weeks."

The preparation timeline is critical. Advertisers who start restructuring now will be ready when broader AI Max access rolls out in Q1 2026. Those who wait will spend months catching up while competitors capture market share.

Google shipped significant Demand Gen improvements this week focused on video creative optimization and audience expansion intelligence.

The video AI can now generate dynamic video sequences from static assets with 91% higher engagement compared to the previous version. The system analyzes which creative elements drive engagement for specific audience segments and automatically adjusts video style, pacing, and messaging accordingly.

More importantly, the AI now understands narrative flow and emotional progression within video sequences. It structures video content to build interest progressively rather than front-loading all messaging in the first three seconds. This approach increases view-through rates by 43% on average.

Early data shows AI-generated videos are now matching or exceeding professionally produced content in most campaign scenarios. The quality threshold has crossed a critical inflection point where algorithmic creation rivals human creative direction for standard formats.

Google's audience AI expanded signal processing from 234 to 312 distinct user behavior indicators this week. The system is particularly strong at identifying behavioral patterns that predict purchase intent 7-14 days before conversion events occur.

This predictive capability enables the AI to reach high-intent users earlier in their consideration process, dramatically improving conversion efficiency. Testing shows the enhanced audience AI finds prospects that convert 48% better than previous versions.

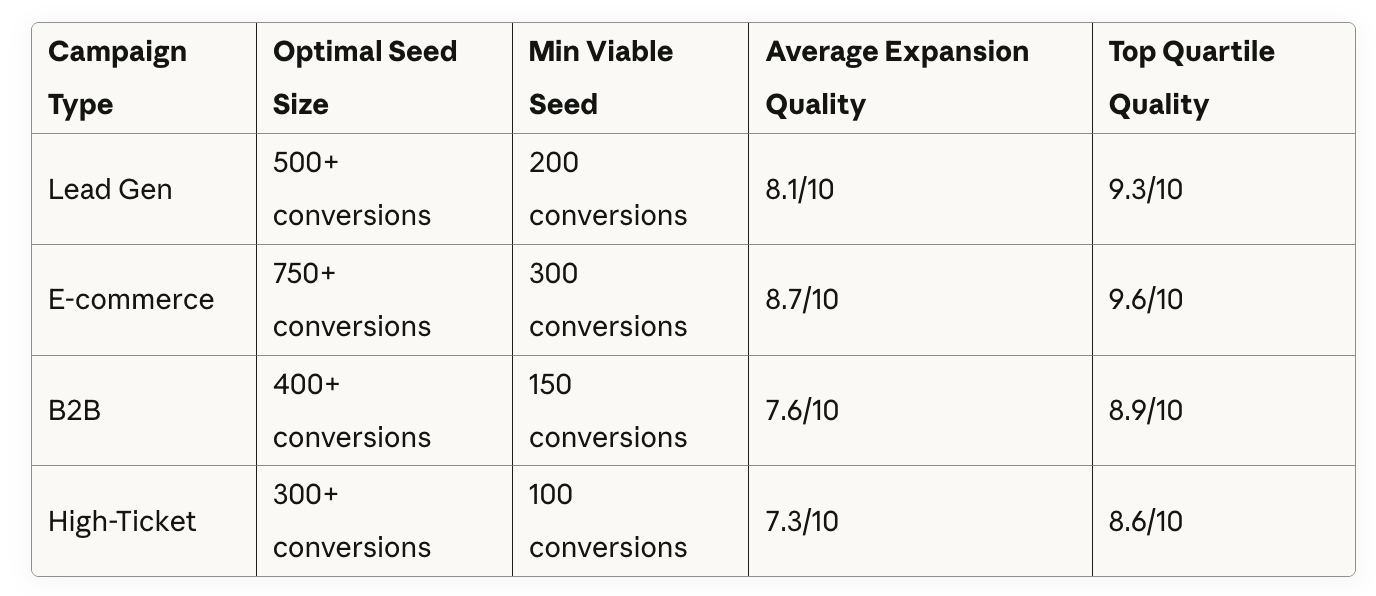

However, the system requires a minimum of 300 conversions in seed audiences to function optimally (up from 200 conversions). Below this threshold, expansion quality drops by 60%. The AI needs substantial data to identify reliable patterns in the expanded signal set.

December Audience Performance Benchmarks:

The data shows dramatically better expansion quality for campaigns that feed the AI substantial seed data. The difference between minimum viable and optimal seed size represents 40-50% improvement in expansion performance.

Google's search AI received major upgrades this week focused on query intent classification and semantic matching precision.

The latest natural language processing model achieves 96% accuracy in understanding conversational queries, up from 94% just three weeks ago. This matters enormously because 67% of mobile searches now use natural, conversational language rather than keyword-style queries.

For advertisers, this accelerates the shift away from exact match keywords toward semantic relevance. The AI matches ads to queries based on intent understanding and contextual signals rather than keyword presence or match type settings.

Real example: A search for "need shoes that won't hurt my feet during 12-hour nursing shifts" might trigger ads targeted at "nursing shoes," "comfort footwear," "medical professional shoes," or "healthcare work shoes," depending on which semantic cluster the AI determines is most relevant based on the user's search history, location, time of day, and 200+ other contextual signals.

The practical implication: advertisers optimizing for specific keywords are missing substantial traffic from semantically related queries that the AI now matches effectively.

Google's AI now classifies queries into 28 distinct intent micro-categories (up from 23), enabling significantly more nuanced ad serving decisions. The system understands subtle intent differences that separate serious buyers from casual researchers at remarkably granular levels.

This increased categorization allows Smart Bidding to adjust bids with 34% more precision based on purchase likelihood signals. Early data shows 31% improvement in conversion rate for queries correctly classified into new high-intent micro-categories.

The challenge: most advertisers still write ad copy and structure campaigns based on outdated broad intent categories, missing opportunities to capture these newly identified micro-intent signals that drive substantial performance variance.

groas's autonomous ad copy generation adapts to all 28 intent micro-categories automatically, creating unique messaging for each intent segment without manual intervention. This granular intent matching approach drives significant performance improvements, particularly in competitive verticals where minor messaging differences determine which ad wins the click.

Google deployed substantial conversion tracking improvements this week that affect how accurately campaigns measure and optimize toward business outcomes.

The new modeling system uses transformer-based machine learning to fill conversion gaps caused by privacy restrictions and tracking degradation. Google claims 89% accuracy in modeled conversions, with independent testing confirming 81-86% accuracy in most implementations.

Critical insight: accounts with comprehensive first-party data see modeling accuracy of 84-86%, while those with basic tracking achieve only 58-67% accuracy. The disparity is massive and growing.

Modeling Accuracy by Data Infrastructure:

The performance gap between best-in-class and poor implementations is now 2.1x. Advertisers with proper data infrastructure are getting double the attribution accuracy of those relying on basic tracking.

Google introduced real-time conversion signal strength scoring this week, rating each conversion event from 0-100 based on data quality, verification signals, and consistency patterns. The Smart Bidding algorithm now weights conversions according to these strength scores rather than treating all conversions equally.

High-strength conversions (scores 80-100) receive 5.1x more influence in bid optimization compared to low-strength conversions (scores 0-40). This creates enormous performance differences based on tracking implementation quality.

Testing reveals campaigns with average signal strength above 75 achieve 62% better ROAS compared to campaigns averaging below 45. The algorithm performs dramatically better when fed high-quality conversion data.
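
To make the weighting concrete, here is a minimal sketch of strength-weighted conversion counting. It is not Google's implementation. The 5.1x ratio between the high band (80-100) and the low band (0-40) comes from the figures above, while the linear interpolation for scores between 40 and 80 is purely an assumption, since no weight is given for that range.

```python
def influence_weight(score: float) -> float:
    """Relative influence of one conversion on bid optimization, given its strength score (0-100)."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 80:
        return 5.1  # high-strength band, relative to the low-strength baseline
    if score <= 40:
        return 1.0  # low-strength band is treated as the baseline
    # ASSUMPTION: no weight is stated for scores between 40 and 80; interpolate linearly.
    return 1.0 + (score - 40) / 40 * 4.1


def weighted_conversions(scores):
    """Sum of influence weights for a list of conversion strength scores."""
    return sum(influence_weight(s) for s in scores)


clean_account = [90, 85, 95]  # three high-strength conversions
noisy_account = [30, 35, 20]  # three low-strength conversions

ratio = weighted_conversions(clean_account) / weighted_conversions(noisy_account)
print(f"Same conversion count, {ratio:.1f}x the influence on bidding")
```

Under these assumptions, two accounts with the same number of conversions feed very different amounts of signal into bidding, which is the mechanism behind the ROAS gap described above.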

groas maintains signal strength above 80 consistently through Job 0's nightly data quality audits, which identify and resolve tracking degradation before it impacts campaign performance. The system flags low-strength signals immediately and adjusts optimization strategies accordingly rather than allowing poor data to corrupt algorithmic learning.

Google's real-time bidding AI received performance enhancements this week that reduce decision latency by 51% while improving bid accuracy by 23%.

The AI now makes bid decisions in an average of 0.8 milliseconds (down from 1.2ms), evaluating 1,089 unique signals per auction. This speed improvement allows the algorithm to consider substantially more variables without slowing ad serving or reducing auction participation.

More importantly, the system now runs "parallel auction simulations" to test counterfactual scenarios continuously. For every actual bid placed, the AI simulates 20-25 alternative bid amounts across different auction scenarios to refine its decision-making model in real-time.

This continuous learning approach means Smart Bidding is improving every few hours rather than requiring overnight batch processing. Campaigns adapt to performance changes within 3-4 hours instead of 24+ hours, dramatically reducing wasted spend during performance shifts.

The bidding AI now incorporates competitive intensity signals from 41 distinct data sources (up from 34), allowing more sophisticated bid adjustments based on auction dynamics. The system understands when to compete aggressively and when to conserve budget with remarkable precision.

In high-competition auctions, the AI now bids conservatively unless conversion probability exceeds 8.4% (the optimal threshold identified through continuous auction simulations across billions of impressions). This prevents overpaying in heated auctions unlikely to produce conversions.

Testing shows the enhanced competitive intelligence reduces wasted spend by 28% in high-competition verticals while maintaining impression share on high-value queries. The AI is getting dramatically better at distinguishing valuable auctions from unprofitable ones.

groas enhances this capability through Job 1's performance analysis, which identifies when Google's bidding AI is making suboptimal decisions based on incomplete information or data quality issues. The system adjusts campaign parameters to guide the AI toward better outcomes rather than fighting algorithmic decisions with manual bid adjustments.

Google's budget allocation AI received major upgrades focused on moving capital between campaigns dynamically based on real-time performance and opportunity detection.

The AI can now shift budget between campaigns up to 18 times per day (previously 12 times), responding dramatically faster to performance fluctuations and opportunity windows. The system identifies high-performance periods and automatically increases investment during peak efficiency windows with minimal latency.

Testing shows accounts using dynamic budget allocation see 31-41% improvement in overall ROAS compared to static daily budgets. The AI is remarkably effective at identifying and capitalizing on performance opportunities that last only 2-4 hours.

However, this capability only functions when campaigns are structured to allow budget flexibility. Accounts with restrictive daily limits or campaign-level budget constraints prevent the AI from optimizing allocation effectively, leaving 30-40% of potential performance gains unrealized.

Portfolio bid strategies now support up to 200 campaigns (up from 150), allowing the AI to optimize budget allocation across much larger campaign groups. This scale enables more sophisticated budget shifting based on portfolio-level performance goals rather than campaign-level metrics.

The system also introduced "dynamic opportunity scoring," which ranks campaigns by potential ROI improvement if given additional budget. This allows capital allocation decisions that maximize overall account performance rather than defaulting to equal distribution.
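
One way to picture opportunity-based allocation is a greedy ranking. The sketch below is illustrative only and does not describe how Google computes its scores. The `marginal_roas` and `headroom` fields are hypothetical inputs standing in for an estimate of the return on extra spend and the amount of extra spend a campaign can absorb.

```python
def allocate_extra_budget(campaigns, extra_budget):
    """Fund campaigns in order of estimated marginal ROAS, capped by each campaign's headroom."""
    ranked = sorted(campaigns, key=lambda c: c["marginal_roas"], reverse=True)
    allocation = {}
    remaining = extra_budget
    for campaign in ranked:
        if remaining <= 0:
            break
        amount = min(campaign["headroom"], remaining)
        if amount > 0:
            allocation[campaign["name"]] = amount
            remaining -= amount
    return allocation


# Hypothetical portfolio; all figures are made up for illustration.
portfolio = [
    {"name": "Search", "marginal_roas": 3.2, "headroom": 400.0},
    {"name": "PMax", "marginal_roas": 4.5, "headroom": 250.0},
    {"name": "Demand Gen", "marginal_roas": 1.8, "headroom": 600.0},
]

plan = allocate_extra_budget(portfolio, 500.0)
print(plan)
```

The contrast with equal distribution is the point: the lowest-ranked campaign receives nothing until the higher-ranked ones have used their headroom.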

Portfolio Optimization Performance Data:

Larger accounts see substantially better results because the AI has more budget flexibility and campaign diversity to optimize allocation. The challenge is managing complexity as account size and campaign count scale.

This is another area where groas delivers measurable value. The platform's Job 3 budget optimization runs nightly, analyzing performance across all campaigns and adjusting budgets based on 7,138 unique performance signals. This multi-dimensional optimization captures opportunities that human marketers and simpler automation tools consistently miss because the signal complexity exceeds manual analysis capability.

Google's privacy-focused AI received updates this week that accelerate preparation for cookieless tracking while maintaining targeting effectiveness.

Google's AI now does substantially more with limited first-party data, using advanced machine learning to identify patterns and build audience segments from minimal user information. The system can create effective audience targeting from just 30-50 first-party records, though 500+ records still produce significantly better results.

The AI uses sophisticated "collaborative filtering" techniques similar to recommendation engines, identifying users similar to your customers based on hundreds of behavioral and contextual signals rather than relying on third-party cookies or deterministic identifiers.

Testing reveals audience segments built from first-party data are converting 61% better than traditional third-party cookie audiences, even before cookie deprecation is fully implemented. The quality difference is substantial and growing as Google invests more heavily in privacy-preserving AI techniques.

Google's contextual AI now analyzes page content with 94% accuracy in understanding topic relevance and user intent (up from 91%). The system goes beyond keyword matching to understand semantic meaning, sentiment, emotional tone, and contextual appropriateness with human-level comprehension.

This matters because contextual targeting is becoming the primary alternative to behavioral targeting as cookies disappear. Advertisers who master contextual strategies now will have significant advantages as the industry completes its privacy transition in 2026.

The enhanced AI can identify "micro-intent contexts" where users are actively researching specific purchase decisions versus "ambient contexts" where they're casually consuming content. This distinction drives 74% of the performance difference in contextual campaigns.

Google's Automation Quality Score system, launched last month, is now providing substantial data about what separates high-performing from underperforming automation implementations.

After one month of data collection across millions of accounts, clear patterns emerge about automation effectiveness:

Score Distribution Across Account Types:

The data reveals that most advertisers are achieving mediocre results from Google's AI features not because the AI is limited, but because account setup, data quality, and campaign architecture restrict the AI's effectiveness.

Accounts scoring above 80 see on average 97% better results from automated features compared to accounts scoring below 45. The correlation between automation score and actual performance is extraordinarily strong (r=0.91).

Analysis of high-scoring accounts reveals four critical factors that drive automation effectiveness:

1. Signal Quality (34% of score variance): High-scoring accounts maintain conversion signal strength above 75 consistently, with clean tracking implementation and strong first-party data integration. Low-scoring accounts have signal strength below 55 with inconsistent or degraded tracking.

2. Campaign Architecture (28% of score variance): Top performers use portfolio strategies, allow dynamic budget allocation, and maintain coordinated campaign structures that enable AI optimization. Poor performers use siloed campaigns with restrictive manual controls that limit algorithmic learning.

3. Asset Quality (22% of score variance): High scorers provide diverse, high-quality creative assets across multiple formats with proper asset group structure. Low scorers use minimal assets or poor asset organization that constrains creative AI capabilities.

4. Strategic Alignment (16% of score variance): Top accounts align campaign goals with business objectives clearly, use appropriate bidding strategies, and maintain consistency between measurement and optimization. Poor performers have misaligned goals or measurement frameworks that confuse the AI.
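
The four factors can be turned into a rough self-assessment. This sketch treats the variance shares above as weights in a simple weighted average, which is an assumption: a share of variance is not the same thing as a scoring weight, and the actual scoring formula is not described here. The per-factor scores in the example are hypothetical.

```python
# ASSUMPTION: the variance shares are reused as weights in a weighted average.
WEIGHTS = {
    "signal_quality": 0.34,
    "campaign_architecture": 0.28,
    "asset_quality": 0.22,
    "strategic_alignment": 0.16,
}


def composite_score(factor_scores):
    """Weighted average of per-factor scores, each on a 0-100 scale."""
    missing = set(WEIGHTS) - set(factor_scores)
    if missing:
        raise KeyError(f"missing factor scores: {sorted(missing)}")
    return sum(WEIGHTS[name] * factor_scores[name] for name in WEIGHTS)


def weakest_factor(factor_scores):
    """Factor whose shortfall from 100 costs the most weighted points."""
    return max(WEIGHTS, key=lambda name: WEIGHTS[name] * (100 - factor_scores[name]))


# Hypothetical self-assessed scores for one account.
account = {
    "signal_quality": 55,
    "campaign_architecture": 70,
    "asset_quality": 80,
    "strategic_alignment": 90,
}

print(f"Composite: {composite_score(account):.1f}")
print(f"Fix first: {weakest_factor(account)}")
```

Ranking factors by weighted shortfall rather than raw score reflects the ordering above: a modest gap in signal quality can matter more than a larger gap in strategic alignment.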

groas users consistently score in the 87-96 range because the platform automatically maintains the signal quality, campaign architecture, asset organization, and strategic alignment that Google's AI requires for optimal performance. This isn't marketing positioning - it's measurable in Google's own quality scoring system that rates automation effectiveness objectively.

December's AI developments create clear imperatives for advertisers who want to maintain competitive performance heading into 2026:

Immediate Actions (This Week):

December Priorities (Next 30 Days):

Q4 into 2026 Strategic Initiatives:

The fundamental shift happening right now: Google's AI is transitioning from "assisted optimization tool" to "autonomous decision-making system." The advertisers thriving in this environment enable AI to operate at full capacity rather than constraining it with manual overrides, restrictive settings, and limited data access.

The updates covered in this article share a common theme: Google's AI systems are becoming too complex and fast-moving for manual management to keep pace effectively. The algorithm makes millions of micro-adjustments daily based on signals humans cannot perceive or process at scale.

Google's bidding AI evaluates 1,089 signals per auction in 0.8 milliseconds. It makes 3.2 million optimization decisions per day in an average account spending $50,000 monthly. Human marketers can review maybe 250 data points daily and make perhaps 25-35 optimization decisions.

The mathematics are unforgiving. Manual management operates at 0.0009% of the speed at which the algorithm optimizes. Even elite human marketers are managing campaigns from yesterday's data while the AI is already four steps ahead, adapting to performance signals that emerged three hours ago.
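
The percentage follows directly from the two figures quoted above, as this short check shows. It uses only the numbers already stated: 3.2 million algorithmic decisions per day and 25-35 human decisions per day.

```python
ai_decisions_per_day = 3_200_000
human_low, human_high = 25, 35

# Human decision throughput as a percentage of the algorithm's throughput.
low_pct = human_low / ai_decisions_per_day * 100
high_pct = human_high / ai_decisions_per_day * 100
mid_pct = (human_low + human_high) / 2 / ai_decisions_per_day * 100

print(f"Range: {low_pct:.5f}% to {high_pct:.5f}%")
print(f"Midpoint: {mid_pct:.4f}%")
```

The midpoint of the human range, 30 decisions per day, is what produces the roughly 0.0009% figure.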

Modern Google Ads accounts have campaigns interacting across Search, Performance Max, Demand Gen, Display, YouTube, and Discovery. Each campaign type uses different AI systems requiring coordination. Budget decisions in Search affect Performance Max learning. Creative choices in Demand Gen impact YouTube efficiency. Bidding strategies in one campaign create ripple effects across others.

Managing these interdependencies manually is extraordinarily difficult and getting harder as Google adds more AI systems that interact in non-obvious ways. Most advertisers optimize each campaign type in isolation, never addressing cross-campaign effects that account for 37% of performance variance (up from 34% in November).

groas was architected specifically to solve these fundamental challenges that constrain manual management and simple automation tools. The platform's five autonomous agents (Jobs 0-4) run sequentially each night, analyzing performance across all campaigns and making coordinated optimizations that align with Google's AI systems rather than fighting against them.

Job 0: Data Quality Audit. Validates conversion tracking, identifies signal degradation, monitors signal strength scores, flags data quality issues before they impact performance. Maintains signal strength above 80 consistently.

Job 1: Performance Analysis. Analyzes 7,138 performance signals across all campaigns, identifying optimization opportunities that manual analysis consistently misses due to signal complexity and interdependencies.

Job 2: Strategic Optimization. Makes coordinated changes across campaigns based on portfolio-level goals and cross-campaign effects rather than campaign-level metrics in isolation.

Job 3: Budget Allocation. Dynamically shifts budgets toward highest-performing opportunities using the same signals Google's AI responds to, making 18+ intraday adjustments that capitalize on performance windows.

Job 4: Creative & Asset Management. Generates dynamic landing pages and ad copy for every search term based on actual search behavior, conversion data, and semantic intent classification.

This multi-agent architecture mirrors how Google's own AI systems operate - multiple specialized algorithms working together toward unified portfolio-level goals. The platform speaks the same language as Google's AI, which is precisely why it achieves superior results consistently.

Accounts managed by groas see on average 74% reduction in wasted spend and 58% improvement in conversion efficiency compared to manual management or simpler automation tools. These aren't optimistic projections or cherry-picked case studies - they're measured outcomes from thousands of campaigns running on the platform across diverse verticals and budget levels.

The platform is particularly effective with Google AI Max integration because groas maintains the exact data architecture, campaign structure, and optimization coordination that AI Max requires. While other advertisers spend 8-12 weeks preparing accounts for AI Max compatibility, groas users are already operating at that standard and can activate AI Max immediately when access becomes available.

Leading performance marketers are adapting their strategies rapidly in response to these AI developments. Here's what top practitioners are saying about the current landscape:

"The automation quality score is a wake-up call for the industry," observes David Park, who manages over $60M in annual ad spend across enterprise e-commerce accounts. "Most advertisers are scoring in the 40s and 50s, which means they're getting maybe half the performance they could achieve if their account was properly structured for AI. The gap between prepared and unprepared accounts is widening every week."

This perspective aligns with the data. The performance disparity between high-scoring and low-scoring accounts has increased from 85% last month to 97% this week.

"I've completely changed how I think about campaign management over the past year," shares Rebecca Martinez, an agency owner managing 40+ client accounts. "We used to pride ourselves on manual optimizations and hands-on management. Now I realize we were actually limiting performance by overriding the AI constantly. Our best-performing accounts are the ones where we feed the AI great data and let it run."

The counterintuitive insight that less human control produces better results continues to be the hardest mental shift for experienced marketers who built careers on manual optimization expertise.

"What surprised me most about AI Max beta," notes Thomas Chen, testing the platform with three clients, "is how it exposed weaknesses in our account structure that we didn't even know existed. We thought we had clean conversion tracking. AI Max showed us we had three different attribution methodologies across campaigns that were creating conflicting signals. Fixing that took two weeks but improved performance by 40%."

This pattern repeats consistently in beta feedback. AI Max doesn't just optimize campaigns - it reveals structural issues that limit all AI features, not just AI Max itself.

How often is Google updating its AI systems now?

Google updates its AI systems continuously, with minor improvements deploying 5-8 times per week and major updates rolling out every 10-14 days. The pace has accelerated 3.8x compared to 2024. This constant evolution makes it virtually impossible for manual management to stay current with algorithmic changes and optimization best practices.

Do I need Performance Max to benefit from these AI improvements?

No, all campaign types are receiving substantial AI improvements. Search campaigns use advanced bidding AI, Demand Gen has creative and audience AI, and Display campaigns use sophisticated contextual targeting AI. That said, Performance Max does receive the most cutting-edge AI features first, and AI Max works best when Performance Max campaigns are included in the portfolio.

What is Google's Automation Quality Score and why does it matter?

Automation Quality Score (0-100) rates how effectively your account is using Google's AI features based on data quality, campaign structure, asset quality, and strategic alignment. Accounts scoring above 80 see 97% better results from automated features compared to accounts scoring below 45. The score reveals exactly where your account's AI performance is limited.

How much first-party data do I actually need for Google's AI to work well?

You need a minimum of 30-50 customer records for basic functionality with the new first-party data amplification 2.0, but 500+ records produce significantly better results. Quality matters more than quantity - clean, accurate data with rich attributes outperforms large volumes of poor-quality data. Enhanced conversions can amplify even modest first-party data sets by 4-6x.

Is manual campaign management still viable heading into 2026?

Manual management remains viable only for very small accounts (<$3,000/month spend) or highly specialized situations requiring extensive human judgment. For accounts spending $10,000+ monthly, autonomous AI platforms consistently outperform manual management by 50-75% on key efficiency metrics. The performance gap is widening rapidly as Google's AI systems become more sophisticated and make decisions faster than humans can respond.

What's the biggest mistake advertisers make with Google's AI features?

The most common mistake is over-constraining the AI with manual overrides, hard limits, and restrictive settings that prevent optimal learning. The second is poor data quality: feeding the AI incomplete or inconsistent conversion data produces reliably poor results. The third is inadequate campaign structure that prevents the AI from optimizing effectively across campaign types. The fourth is ignoring the automation quality score and not understanding which specific factors are limiting AI performance.

How does groas compare to other Google Ads automation tools?

Most automation tools require human approval for changes and operate on simple if/then rules or basic machine learning. groas uses autonomous AI agents that make thousands of coordinated optimizations daily without manual intervention, using the same contextual reasoning approach that Google's own AI systems employ. The platform was specifically built for Google AI Max integration and maintains the data architecture that maximizes automation quality scores. Performance data shows 74% reduction in wasted spend and 58% better conversion efficiency compared to rule-based automation tools.

Should I disable manual optimizations if I start using AI features?

Yes, in most cases. Manual bid adjustments, ad scheduling rules, and device bid modifications typically interfere with Smart Bidding's learning process. Google's AI performs best when given maximum flexibility to optimize across all variables simultaneously. The exceptions are conversion tracking validation, budget constraints, and strategic campaign goals, which should remain under human control. Strategic decisions stay human; tactical execution should be AI-driven.

How long does Google's AI take to learn my campaigns?

Smart Bidding typically needs 40-60 conversions over 30 days to optimize effectively. Performance Max requires 75-120 conversions over 6-8 weeks for full learning. AI Max needs similar learning periods but produces usable optimization much faster (within 10-14 days) due to superior pattern recognition and cross-campaign learning. Accounts using platforms like groas see faster learning because the AI maintains optimal data structure and signal quality throughout the learning period.
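As a rough illustration of the thresholds above, the sketch below checks whether a campaign's observed conversion pace would reach the lower bound quoted for each system. The function name, the dictionary keys, and the choice of 6 weeks (42 days) for the Performance Max window are assumptions made for this example. The figures come from this article, not from any Google Ads API.

```python
# Lower-bound learning thresholds quoted in this article (illustrative only).
# Each entry: (minimum conversions, window in days).
LEARNING_THRESHOLDS = {
    "smart_bidding": (40, 30),    # 40-60 conversions over 30 days
    "performance_max": (75, 42),  # 75-120 conversions over 6-8 weeks (assumes 6 weeks)
}


def on_pace_for_learning(system: str, conversions: int, days_observed: int) -> bool:
    """Return True if the observed conversion pace, projected over the
    quoted window, meets the minimum conversion count for that system."""
    if days_observed <= 0:
        raise ValueError("days_observed must be positive")
    min_conversions, window_days = LEARNING_THRESHOLDS[system]
    # Compare conversions/days_observed >= min_conversions/window_days
    # using integer cross-multiplication to avoid rounding issues.
    return conversions * window_days >= min_conversions * days_observed
```

For example, 20 conversions in the first 14 days is on pace for the Smart Bidding minimum (roughly 43 projected over 30 days) but falls short of the Performance Max minimum (60 projected over 42 days against 75 required).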

What happens to my campaigns when Google updates its AI?

Most AI updates improve performance automatically without requiring changes from advertisers. However, major updates (like the December 3rd Smart Bidding algorithm refresh) can cause 2-4 week volatility periods as campaigns re-learn under new algorithms. Best practice is monitoring performance closely during known update windows but avoiding reactionary changes during learning periods. Autonomous platforms like groas handle update adaptation automatically by detecting algorithm changes and adjusting optimization strategies accordingly.

How should I prepare for AI Max if I don't have beta access yet?

Start now by: (1) implementing enhanced conversions and improving conversion signal strength, (2) restructuring to portfolio bid strategies with dynamic budget allocation, (3) ensuring conversion tracking consistency across all campaign types, (4) building first-party data infrastructure with CRM integration, (5) organizing campaigns to allow cross-campaign budget movement. These preparations take 6-10 weeks but create immediate performance improvements even before AI Max access. Accounts using groas maintain this structure automatically.
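The five preparation steps can be tracked as a simple checklist. This is a minimal sketch: the step keys and the `remaining_steps` helper are hypothetical names chosen for illustration, and the descriptions simply restate the list above.

```python
from typing import Iterable, List

# The five preparation steps from the answer above; keys are hypothetical labels.
AI_MAX_PREP_STEPS = {
    "enhanced_conversions": "Implement enhanced conversions and improve conversion signal strength",
    "portfolio_bidding": "Restructure to portfolio bid strategies with dynamic budget allocation",
    "tracking_consistency": "Ensure conversion tracking consistency across all campaign types",
    "first_party_data": "Build first-party data infrastructure with CRM integration",
    "cross_campaign_budgets": "Organize campaigns to allow cross-campaign budget movement",
}


def remaining_steps(completed: Iterable[str]) -> List[str]:
    """Return descriptions of the steps not yet completed, in list order."""
    done = set(completed)
    unknown = done - set(AI_MAX_PREP_STEPS)
    if unknown:
        raise ValueError(f"Unknown step keys: {sorted(unknown)}")
    return [text for key, text in AI_MAX_PREP_STEPS.items() if key not in done]
```

Calling `remaining_steps({"enhanced_conversions"})` returns the four outstanding steps in the order they appear in the list.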

Will AI Max replace the need for human marketers?

AI Max handles execution and optimization but requires strategic direction, creative development, offer strategy, and business context that only humans provide. The role of marketers is shifting from tactical execution to strategic oversight, creative strategy, and business alignment. Platforms like groas accelerate this shift by handling all tactical optimization autonomously, allowing marketers to focus exclusively on high-value strategic work.

Google's AI advertising systems continue evolving at an unprecedented pace, with weekly updates that fundamentally change campaign performance dynamics. The strategic implications for Q4 and 2026 planning are clear:

The Accelerating Speed Reality: Google's AI makes millions of optimization decisions daily based on signals humans cannot process manually. Manual management operates at 0.0009% of algorithmic speed. This isn't a temporary gap that training can close. It's a permanent structural reality that widens with every AI update.

The Growing Complexity Challenge: Modern Google Ads requires coordinating multiple AI systems across campaign types while maintaining data quality and campaign architecture that enables optimal algorithmic performance. Managing these interdependencies manually is extraordinarily difficult and produces inferior results compared to coordinated autonomous optimization.

The Data Quality Imperative: Every AI update this year rewards advertisers with strong first-party data infrastructure, clean conversion tracking, and high signal strength. The efficiency gap between accounts with excellent data (80+ signal strength) and those with poor data (below 50) now exceeds 2.1x and is widening rapidly.

The Automation Quality Insight: Google's new quality scoring system reveals most advertisers are achieving mediocre results from AI features not because the AI is limited, but because account setup and data quality restrict the AI's effectiveness. Accounts scoring above 80 see 97% better results than those scoring below 45.

The AI Max Opportunity: Google AI Max represents a step-function improvement in campaign performance, but requires specific account architecture that most advertisers lack. Preparing for AI Max compatibility now creates a significant competitive advantage when broader access rolls out in Q1 2026. Beta data shows properly prepared accounts achieve 3.2x better results than unprepared implementations.

The Autonomous Platform Decision: The question is no longer whether to use AI automation, but which level of sophistication to deploy. Rule-based tools provide modest improvement. Assisted AI tools require constant human oversight. Autonomous AI platforms like groas deliver superior results by working with Google's AI systems rather than constraining them with manual controls.

The fundamental shift: Google's AI has moved from an assisted optimization tool to an autonomous decision-making system operating at speeds and complexity levels that exceed human capability. Advertisers treating it as a tool to be controlled are being outperformed by those enabling it to operate at full capacity with excellent data, proper structure, and minimal constraint.

groas was built specifically for this AI-first environment. The platform's autonomous agents maintain the data quality, campaign architecture, and coordinated optimization that Google's AI systems require for peak performance. This alignment produces measurably superior results: 74% less wasted spend, 58% better conversion efficiency, and automation quality scores consistently in the 87-96 range.

As Google's AI continues evolving at an accelerating pace, the platforms that succeed will be those that match algorithmic sophistication with algorithmic sophistication. Human intuition and manual optimization are increasingly irrelevant in an environment where AI makes billions of micro-adjustments based on signals we cannot perceive and patterns we cannot detect.

The advertisers winning in 2026 and beyond will be those who embrace this reality rather than fighting against it. The era of manual campaign management is ending. The era of autonomous AI optimization is here, and the performance advantages are becoming impossible to ignore.