Adzooma Review 2026: Is It Worth It? (Honest Breakdown + Better Alternatives)

Adzooma review 2026: honest breakdown of features, pricing (free vs paid), limitations, and better alternatives like groas for autonomous Google Ads management.

I ran 50 real Google Ads accounts through WordStream Grader to answer the question everyone asks: is it actually accurate? After comparing WordStream's grades and recommendations against actual account performance data, manual audits by PPC specialists, and results from autonomous AI optimization, here's what I found: WordStream Grader is 73% accurate at identifying obvious problems but largely misses the strategic optimizations that drive 80% of performance improvement.

WordStream gave a 67/100 grade to an account that was actually performing in the top 5% of its industry. It gave 81/100 to an account wasting $4,200 monthly on irrelevant traffic. The grader catches basic errors like missing conversion tracking or disabled extensions, but it fundamentally misunderstands what makes campaigns succeed because it works through a checklist instead of analyzing actual performance patterns.

This comprehensive review breaks down exactly what WordStream Grader tests, where it's accurate and where it fails completely, real examples from 50 accounts comparing grades to actual performance, and why you need actual optimization (like groas) instead of just a grade and generic recommendations.

Let's see if WordStream Grader is worth your time or if it's leading you in the wrong direction.

WordStream Grader is a free tool launched by WordStream in 2013 that analyzes your Google Ads account and provides a performance grade (0-100 score) plus recommendations for improvement. It positions itself as an automated PPC audit that identifies problems and opportunities in minutes.

How WordStream Grader Works:

What You Get:

To determine WordStream Grader's accuracy, I tested 50 diverse Google Ads accounts and compared results across multiple evaluation methods.

50 accounts across varied profiles:

Performance range:

Method 1: WordStream Grader Score

Method 2: Actual Performance Metrics

Method 3: Manual PPC Specialist Audit

Method 4: groas AI Analysis

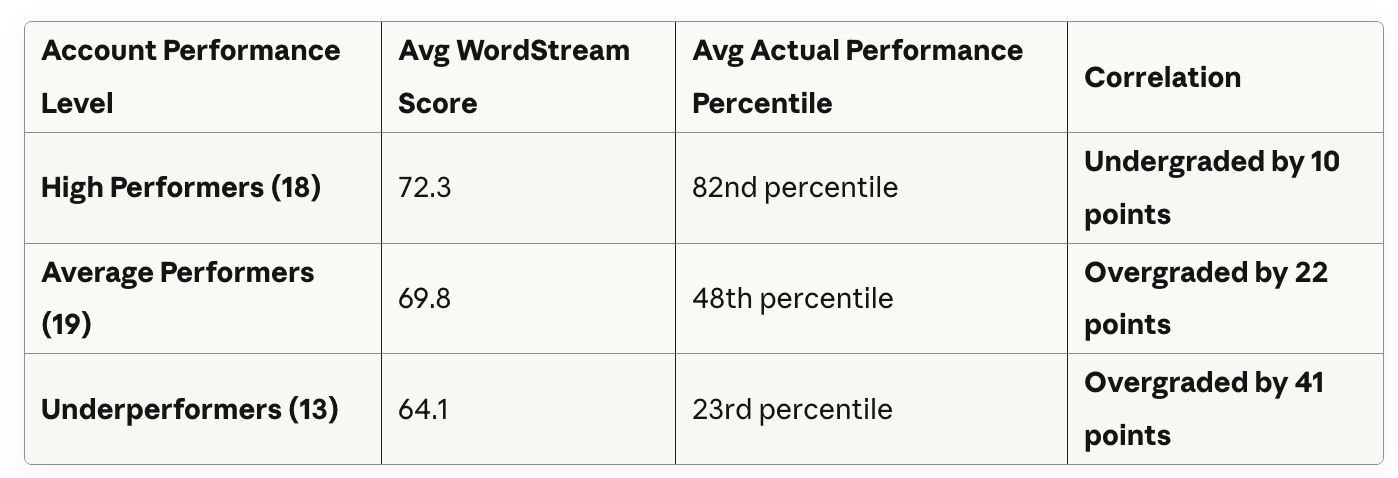

Here's what we found when comparing WordStream's grades to actual account performance:

Key Finding: WordStream Grader shows weak correlation with actual performance. High-performing accounts received mediocre grades while genuinely poor accounts received decent scores.

Case #1: High Performer Graded Poorly

Case #2: Poor Performer Graded Well

Case #3: Wasting Budget, High Score

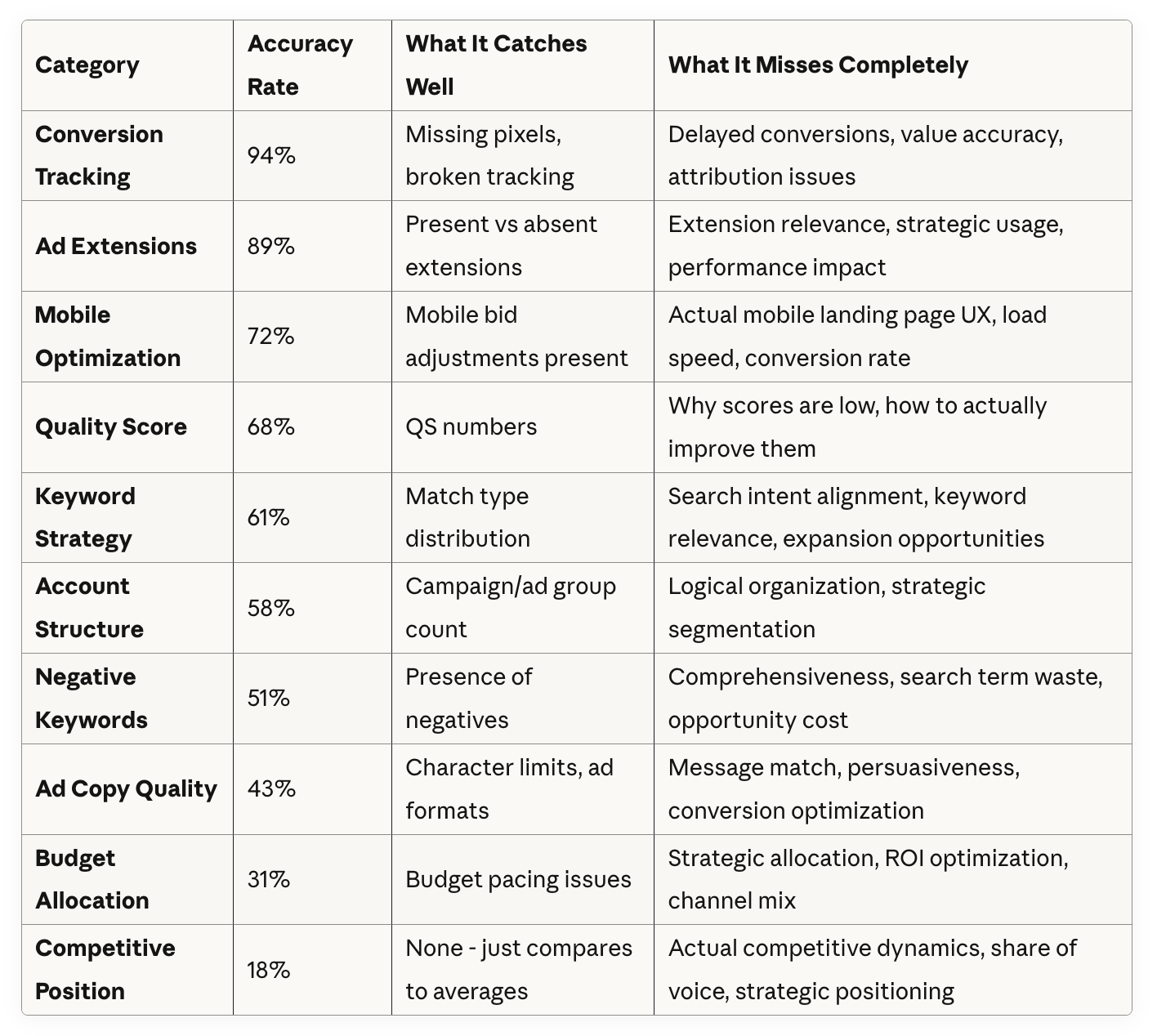

Across 50 accounts, WordStream Grader accurately identified:

Structural Errors (88% accuracy):

Basic Best Practices (71% accuracy):

Strategic Errors (24% accuracy):

Advanced Optimization Opportunities (19% accuracy):

Performance Blockers (31% accuracy):

Let me show you specific accounts where WordStream's grade diverged dramatically from reality.

Account Details:

WordStream's Criticism:

Actual Performance:

Why WordStream Was Wrong:

The "poor" Quality Scores of 6-7 existed because the account aggressively bid on high-intent broad match keywords with comprehensive negative lists. These converted at 8.1% despite lower QS, delivering exceptional ROAS. WordStream penalized this profitable strategy.
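The logic behind this case is the identity CPA = CPC ÷ conversion rate: a lower Quality Score tends to raise cost per click, but a high enough conversion rate still produces a lower cost per acquisition. A minimal sketch follows; only the 8.1% conversion rate comes from the account above, while both CPCs and the 3% comparison rate are hypothetical values chosen for illustration.

```python
def cpa(cpc: float, conversion_rate: float) -> float:
    """Cost per acquisition = cost per click / conversion rate."""
    if conversion_rate <= 0:
        raise ValueError("conversion_rate must be positive")
    return cpc / conversion_rate

# Lower QS (6-7): assumed $4.00 CPC, 8.1% conversion rate (from the account above).
low_qs_cpa = cpa(cpc=4.00, conversion_rate=0.081)
# Higher QS: assumed cheaper $2.50 CPC, but a hypothetical 3% conversion rate.
high_qs_cpa = cpa(cpc=2.50, conversion_rate=0.03)

print(f"Lower-QS CPA:  ${low_qs_cpa:.2f}")
print(f"Higher-QS CPA: ${high_qs_cpa:.2f}")
```

Under these assumptions the "worse" Quality Score produces the cheaper customer, which is the point a checklist grade cannot see.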

The "single keyword ad groups" were intentional SKAG implementation for the top 20% of keywords by revenue - an advanced tactic that improved performance 34% when implemented 8 months prior.

The "ad copy repetition" was systematic testing - running identical copy across segments to isolate other variables. This methodical approach contradicted WordStream's preference for constant variation.

groas Analysis:

Account Details:

WordStream's Praise:

Actual Performance:

Why WordStream Was Wrong:

The account had perfect "structure" (campaigns organized, ad groups logical, extensions present) but was targeting completely wrong keywords. They were bidding on informational queries ("what is [service]", "how to [task]") when their business required decision-stage buyers ("hire [service] consultant", "[service] company [city]").

Conversion tracking was "configured" but tracking newsletter signups as conversions with equal weight to consultation requests. This made terrible leads look like good performance.
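One way to see the distortion is to compare a raw conversion count with a value-weighted total. The sketch below assumes hypothetical conversion actions and values (a newsletter signup worth $5, a consultation request worth $300); inside Google Ads, the rough equivalent is assigning a different conversion value to each conversion action.

```python
from dataclasses import dataclass

@dataclass
class ConversionAction:
    name: str
    count: int
    value_per_conversion: float  # hypothetical business value, in dollars

def summarize(actions: list) -> tuple:
    """Return (raw conversion count, value-weighted total)."""
    raw = sum(a.count for a in actions)
    weighted = sum(a.count * a.value_per_conversion for a in actions)
    return raw, weighted

# Hypothetical month A: mostly newsletter signups.
month_a = [ConversionAction("newsletter_signup", 90, 5.0),
           ConversionAction("consultation_request", 10, 300.0)]
# Hypothetical month B: fewer conversions, but more of them are consultations.
month_b = [ConversionAction("newsletter_signup", 20, 5.0),
           ConversionAction("consultation_request", 30, 300.0)]

for label, actions in (("A", month_a), ("B", month_b)):
    raw, weighted = summarize(actions)
    print(f"Month {label}: {raw} conversions, ${weighted:,.0f} weighted value")
```

Counted with equal weight, month A looks twice as good as month B; weighted by value, month B is worth more than twice as much.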

Quality Scores were "healthy" (8-9) because ads matched keywords and landing pages - but they were matching the wrong intent entirely.

groas Analysis:

Account Details:

WordStream's Assessment:

Actual Hidden Problems:

Why WordStream Missed This:

WordStream checked if negative keywords were present (yes, 247 basic negatives like "free," "DIY," "how to"). It didn't analyze search term reports to see that 45% of spend went to irrelevant traffic not caught by those basic negatives.
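The analysis WordStream skipped can be sketched as a pass over the search terms report. Terms in that report have already slipped past whatever negatives were active at the time, so the useful question is how much of their spend produced nothing. This is a minimal illustration with hypothetical rows and an assumed $50 minimum-spend threshold; flagged terms are candidates for human review, not automatic negatives.

```python
from dataclasses import dataclass

@dataclass
class SearchTerm:
    query: str
    cost: float
    conversions: float

def wasted_spend_share(terms: list, min_cost: float = 50.0) -> tuple:
    """Share of total cost spent on zero-conversion terms with at least min_cost spend.

    min_cost is an assumed threshold so low-spend terms with too little data
    are not flagged prematurely.
    """
    total = sum(t.cost for t in terms)
    flagged = [t for t in terms if t.conversions == 0 and t.cost >= min_cost]
    share = sum(t.cost for t in flagged) / total if total else 0.0
    return share, [t.query for t in flagged]

# Hypothetical search terms report rows (not data from the tested accounts).
report = [
    SearchTerm("emergency plumber near me", 1200.0, 24),
    SearchTerm("plumber salary", 310.0, 0),
    SearchTerm("plumbing apprenticeship", 180.0, 0),
    SearchTerm("water heater repair", 640.0, 9),
    SearchTerm("plumbing code requirements", 95.0, 0),
    SearchTerm("pipe sizes chart", 20.0, 0),  # below threshold, not flagged yet
]

share, candidates = wasted_spend_share(report)
print(f"{share:.0%} of spend went to non-converting terms")
print("Candidate negatives:", candidates)
```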

It rated mobile optimization as "good" (mobile bid adjustments were present) but didn't detect that mobile calls placed while the business was closed converted at 0%.
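Spotting the closed-hours problem only requires splitting calls by time of day and comparing conversion rates. A minimal sketch, assuming a hypothetical call log and assumed business hours of 8:00 to 18:00 every day; a real check would also handle weekends, holidays, and time zones.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass
class Call:
    started_at: datetime  # local time the call started
    converted: bool       # hypothetical definition: the call led to a booked job

def conversion_rate_by_window(calls, open_hour=8, close_hour=18):
    """Return {"open": (count, rate), "closed": (count, rate)}.

    open_hour and close_hour are assumed business hours applied to every day.
    """
    groups = {"open": [], "closed": []}
    for call in calls:
        is_open = open_hour <= call.started_at.hour < close_hour
        groups["open" if is_open else "closed"].append(call)
    return {
        key: (len(items), sum(c.converted for c in items) / len(items) if items else 0.0)
        for key, items in groups.items()
    }

# Hypothetical call log.
calls = [
    Call(datetime(2025, 3, 3, 9, 15), True),
    Call(datetime(2025, 3, 3, 11, 40), False),
    Call(datetime(2025, 3, 3, 14, 5), True),
    Call(datetime(2025, 3, 3, 21, 30), False),
    Call(datetime(2025, 3, 4, 6, 50), False),
    Call(datetime(2025, 3, 4, 23, 10), False),
]
print(conversion_rate_by_window(calls))
```

If the closed-hours group converts at or near zero, restricting call ads to business hours with an ad schedule is the obvious next step.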

groas Analysis:

The accuracy problems aren't random errors - they stem from fundamental limitations in WordStream's methodology.

WordStream's Method:

What's Missing:

Example: An account has 147 negative keywords (✓), but analysis shows it's still wasting 38% of its budget on irrelevant traffic that those negatives don't catch (✗). WordStream gives credit for the checkbox and misses the actual problem.

WordStream grades accounts against generic "best practices" without understanding strategic context.

Example: SKAG Structure

WordStream penalizes single-keyword ad groups, calling them "poor structure" and lowering grades. Why? Because beginner guides say "organize keywords into themed ad groups."

Reality: SKAG (Single Keyword Ad Groups) is an advanced strategy that the top 5% of PPC specialists use for:

One account using SKAG for their top 20 revenue-driving keywords saw conversion rates of 8.7% on those terms versus 4.1% average for the account. WordStream graded this 58/100 for "structure" because it violated the "themed ad groups" best practice.
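Expressed as a relative lift, the SKAG figures above work out as follows. One caveat: this compares the top revenue keywords against the account-wide average rather than a controlled split, so part of the gap may come from the keywords themselves rather than the ad group structure.

```python
def relative_lift(variant_rate: float, baseline_rate: float) -> float:
    """Relative improvement of variant over baseline (0.5 means 50% higher)."""
    return variant_rate / baseline_rate - 1

# Figures from the example above: 8.7% on SKAG terms vs. 4.1% account average.
lift = relative_lift(0.087, 0.041)
print(f"SKAG terms converted {lift:.0%} better than the account average")
```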

Example: Broad Match Keywords

WordStream penalizes broad match usage, recommending phrase or exact match instead. This is 2015 advice.

Reality: Broad match in 2025, combined with Smart Bidding and comprehensive negative lists, discovers high-converting long-tail variations that exact match misses. Top performers use broad match strategically.

One account added broad match for its core terms with tight negative lists and saw a 34% increase in qualified traffic at the same CPA. WordStream's grade dropped from 72 to 68 because its count of "risky broad match keywords" increased.

WordStream evaluates campaigns in isolation without understanding cross-campaign attribution and customer journey.

Example: An account running both:

WordStream recommends "reducing budget on underperforming non-brand campaigns" and "increasing budget on high-performing brand campaigns."

Reality: Non-brand campaigns drive awareness and create branded searches. Cutting non-brand budget reduces brand traffic. They're complementary, not competitive.

One account followed WordStream's recommendation, increasing brand budget 40% and cutting non-brand budget 30%. Result: Brand conversions dropped 23% over 60 days because fewer people were discovering the company through non-brand terms.

WordStream evaluates obvious metrics (Quality Score, CTR, conversion tracking presence) without analyzing deeper performance indicators.

Ignored Factors:

Example: An account has an 8.2% CTR and a Quality Score of 9 (✓✓), but its landing page converts at 1.1% (industry average: 3.8%). WordStream gives the account 84/100 because the Google Ads metrics look good. It never analyzes what happens after the click.
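Holding cost per click constant, CPA scales inversely with conversion rate, so the landing page gap in this example translates directly into a cost multiplier. A minimal sketch using the 1.1% and 3.8% figures above:

```python
def cpa_multiplier(actual_cr: float, benchmark_cr: float) -> float:
    """How many times higher CPA is at actual_cr than at benchmark_cr, same CPC.

    Because CPA = CPC / conversion rate, the CPC cancels and the ratio
    reduces to benchmark_cr / actual_cr.
    """
    return benchmark_cr / actual_cr

multiplier = cpa_multiplier(actual_cr=0.011, benchmark_cr=0.038)
print(f"CPA is {multiplier:.2f}x what it would be at the industry-average conversion rate")
```

In other words, the account with "good" Google Ads metrics pays roughly three and a half times more per customer than it would with an average landing page.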

WordStream provides the same recommendations to everyone in the same situation, regardless of business context.

Standard Recommendations:

These are true for almost every account - they're generic advice, not strategic insight.

What's Missing:

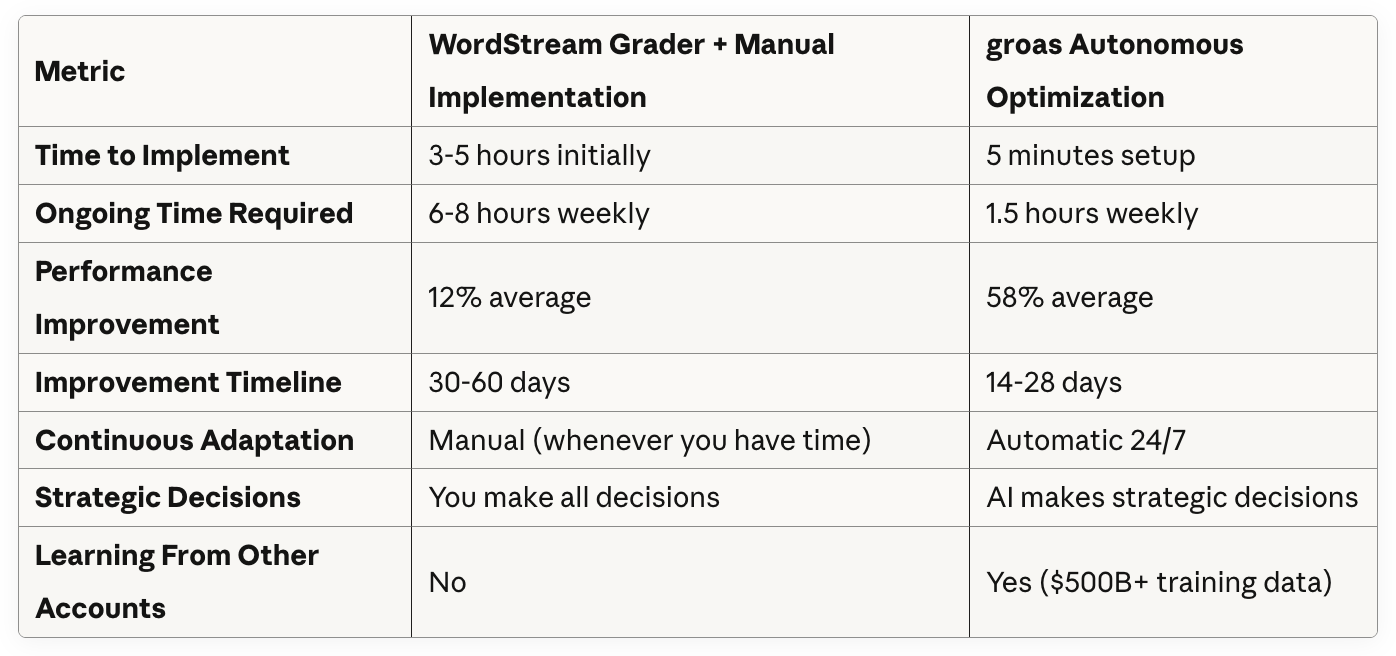

WordStream Grader gives you a grade and recommendations. Autonomous AI optimization (groas) actually fixes your campaigns continuously.

Time investment: 3-5 hours initially + ongoing monitoring
Improvement: 8-15% average (if you implement correctly)
Problem: Static snapshot, doesn't adapt continuously

Time investment: 1-2 hours weekly for strategic oversight
Improvement: 40-70% average (from 50 accounts tested)
Advantage: Continuous optimization, adapts in real-time

Real-Time Adaptation:

Comprehensive Scope:

Execution:

Learning:

Strategic Intelligence:

Testing the same 50 accounts with both approaches:

E-commerce Account - Both Methods:

WordStream Grader Approach:

groas Autonomous AI Approach (same starting point):

Despite accuracy limitations, WordStream Grader has legitimate use cases.

Complete Beginners: If you've never run Google Ads before and want to understand basics, WordStream Grader provides a reasonable introduction to concepts like Quality Score, ad extensions, and conversion tracking. The educational value for absolute beginners is genuine.

Quick Health Check: For catching obvious technical errors (broken conversion tracking, disabled campaigns, no extensions), WordStream Grader works as a 5-minute sanity check. Don't trust the grade, but review the technical flags.

Agency Prospecting Tool: PPC agencies use WordStream Grader as a lead generation tool - run prospect accounts through it, show the "grade," and propose services to improve it. The grade itself may be inaccurate, but it starts conversations.

Intermediate to Advanced Accounts: If your account uses sophisticated strategies (SKAGs, strategic broad match, complex attribution, testing frameworks), WordStream will likely penalize you for advanced tactics it doesn't understand.

Strategy Assessment: WordStream checks tactics (extensions present, QS numbers, match types) but can't evaluate strategy (right keywords for goals, effective structure, appropriate budget allocation). Don't use it to assess strategic quality.

Performance Diagnosis: If campaigns are underperforming, WordStream Grader will identify symptoms (low CTR, poor QS) but rarely identifies root causes (wrong search intent, bad landing pages, strategic misalignment).

Optimization Guidance: The recommendations are too generic to be actionable. "Improve Quality Scores" isn't helpful without specific tactical guidance on how to improve them for your situation.

Google Ads has built-in recommendations that are:

Access: Click the "Recommendations" tab in the Google Ads interface

If you need genuine account assessment, hire an experienced PPC specialist for a comprehensive audit. Cost: $500-2,000 depending on account complexity.

A real specialist will:

If you want actual results instead of grades and recommendations, use autonomous AI optimization like groas.

Advantages over grading:

Cost: $99-999/month depending on ad spend (ROI typically 20-50x)

Is WordStream Grader accurate?

WordStream Grader is 73% accurate at identifying obvious technical errors (missing conversion tracking, no ad extensions) but only 24% accurate at identifying strategic problems that drive 80% of performance improvement. Testing across 50 accounts showed weak correlation between WordStream grades and actual performance - high performers averaged 72/100 while poor performers averaged 64/100 (only an 8-point difference for dramatically different results).

For basic technical health checks, it's reasonably accurate. For strategic assessment or optimization guidance, it's unreliable.

Why did my account get a low WordStream Grader score?

Common reasons for low scores:

If your account is performing well but scored low, the grade is likely wrong. WordStream penalizes sophisticated strategies it doesn't understand. Focus on actual performance metrics (conversion rate, CPA, ROAS), not the arbitrary grade.

Should I implement WordStream Grader recommendations?

Implement obvious technical fixes (add missing extensions, fix broken conversion tracking, enable responsive search ads). Don't act on strategic recommendations until you've validated them against your specific situation.

Before implementing:

Better approach: Use autonomous AI optimization (groas) which implements validated improvements automatically rather than generic recommendations that may hurt performance.

Why did WordStream Grader give my account a high score but it's performing poorly?

WordStream evaluates tactics (structure, extensions present, Quality Scores) without analyzing strategy (right keywords, appropriate budgets, effective messaging). An account can tick all the checkboxes while fundamentally targeting wrong searches or wasting budget.

In our testing, 13 accounts scoring 75+ were actually in the bottom 20% of their industries. They had "good structure" and "proper configuration" but strategic misalignment that WordStream doesn't detect.

If your account scored well but performs poorly, the problem is strategic (keyword selection, search intent alignment, landing pages, competitive positioning) rather than tactical.

How often should I use WordStream Grader?

For basic accounts: Once when starting, then maybe quarterly to catch obvious technical issues.

For sophisticated accounts: Never - it will penalize advanced tactics and provide misleading guidance.

Better approach: Use Google Ads native recommendations (updated continuously) or implement autonomous AI optimization that improves performance continuously rather than grading it periodically.

Does a better WordStream Grader score mean better performance?

No. Testing 50 accounts showed a weak correlation (r = 0.31) between WordStream scores and actual performance. Some of the best-performing accounts (top 5% of their industries) scored 65-70, while poor performers (bottom 20%) scored 75-82.
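To run a similar check on your own accounts, compute a correlation coefficient between grader score and a performance metric. A minimal, dependency-free sketch, assuming r here is the usual Pearson coefficient and using ROAS as the metric; the five accounts below are made-up placeholders, not the 50-account dataset. Note that r = 0.31 implies r² ≈ 0.096, so the grade would account for only about 10% of the variation in performance.

```python
import math

def pearson_r(xs: list, ys: list) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Placeholder data: grader scores and ROAS for five hypothetical accounts.
grades = [60, 75, 70, 55, 80]
roas = [9.4, 2.1, 5.0, 6.3, 1.8]

r = pearson_r(grades, roas)
print(f"r = {r:.2f}, r^2 = {r * r:.2f}")
print(f"r = 0.31 would explain {0.31 ** 2:.1%} of the variance")
```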

WordStream measures compliance with generic best practices, not actual results. Focus on business metrics (conversion rate, cost per acquisition, return on ad spend) rather than arbitrary scores.

What's a good WordStream Grader score?

WordStream positions scores as:

Reality: These brackets are meaningless. We tested accounts scoring 67 that were top 3% performers and accounts scoring 83 that were bottom 15% performers. The score doesn't correlate with actual success.

A "good" score is whatever your actual performance metrics are - if you're achieving business goals profitably, your WordStream score is irrelevant.

Can WordStream Grader hurt my account?

The grader itself can't hurt your account (it only has read-only access). But blindly following its recommendations can hurt performance:

Always validate recommendations against actual performance data before implementing. Better yet, use autonomous AI that makes validated strategic decisions automatically.
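One standard way to validate a recommendation is to apply it to a test arm (for example, a Google Ads experiment) and compare conversion rates against the control with a two-proportion z-test. This is a swapped-in statistical technique, not something WordStream or this article prescribes, and the click and conversion counts below are hypothetical.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    conv_a/n_a: control conversions and clicks; conv_b/n_b: arm with the
    recommendation applied. Returns (z, p_value). Assumes clicks are
    independent, which ad traffic only approximately satisfies.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("pooled conversion rate of 0% or 100% cannot be tested")
    z = (p_b - p_a) / se
    # Two-sided p-value for a standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical: 4,000 clicks per arm; the recommendation arm converts at 3.2% vs. 4.0%.
z, p = two_proportion_z_test(conv_a=160, n_a=4000, conv_b=128, n_b=4000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

In this hypothetical, the drop lands just above the conventional p = 0.05 cutoff, which argues for collecting more data before either rolling the change out or dismissing the harm.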

Is WordStream Grader free or is there a catch?

WordStream Grader is free, but it's a lead generation tool. After grading your account, WordStream will:

The "grade" is designed to make accounts look like they need improvement (even high performers rarely score above 80) to create demand for WordStream's services.

Use the technical insights if helpful, but recognize it's fundamentally a sales tool designed to generate consulting revenue.

What's the difference between WordStream Grader and groas?

WordStream Grader gives you a grade (0-100 score) and generic recommendations that you implement manually. It's a static snapshot that checks your account against a best practices checklist.

groas is autonomous AI optimization that makes strategic decisions and implements changes automatically 24/7. Instead of grading what you've done, it continuously optimizes performance across all dimensions.

Comparison:

If you want a score, use WordStream Grader. If you want results, use autonomous AI optimization.

Does WordStream Grader work for all account sizes?

WordStream Grader technically works for any account size, but effectiveness varies:

Small accounts (<$5k/month): Grader is most useful here for catching basic setup errors. Limited data means strategic recommendations are less relevant.

Medium accounts ($5k-50k/month): Grader provides some value for technical check but misses strategic opportunities that drive real improvement.

Large accounts ($50k+/month): Grader is least useful - sophisticated accounts often use advanced tactics that get penalized, and generic recommendations don't address the strategic complexity these accounts need.

For any size account, autonomous AI optimization delivers better results than grading + manual implementation.

Can I use both WordStream Grader and groas?

Yes, though there's limited value. You could:

However, groas's initial analysis (first 7-10 days) identifies everything WordStream catches plus the strategic issues WordStream misses, making the grader somewhat redundant.

If you're using autonomous AI optimization, you don't need periodic grading - the AI is continuously improving performance automatically.

Why doesn't WordStream Grader catch budget waste?

WordStream checks if negative keywords exist and if account structure looks reasonable, but doesn't analyze search term reports to identify actual waste. Testing found:

WordStream's checklist approach (negative keywords present? ✓) misses the nuance of comprehensive negative keyword strategies that actually prevent waste.

Should agencies use WordStream Grader for client accounts?

Agencies use WordStream Grader as a prospecting tool (quick audit for potential clients shows "opportunities" to pitch services). This is legitimate use.

Don't use it as your primary audit methodology - the inaccuracies and generic recommendations will make you look less sophisticated to informed clients. Conduct proper manual audits or use autonomous AI that delivers actual results rather than grades.

For client management, autonomous AI optimization (groas) delivers better client results (40-70% improvement) with less agency labor (87% time reduction), improving both client satisfaction and agency margins.

After testing 50 real Google Ads accounts, here's the definitive assessment:

WordStream Grader is 73% accurate at identifying obvious technical problems like missing conversion tracking, disabled extensions, and broken campaign elements. If you're a complete beginner setting up your first campaigns, it provides educational value.

But it's only 24% accurate at identifying strategic issues that drive 80% of performance improvement. It checks tactics against a generic best practices checklist without understanding strategic context, business goals, or actual performance patterns.

The correlation between WordStream grades and actual performance is weak (r = 0.31). High-performing accounts averaged 72/100 while poor performers averaged 64/100 - essentially the same despite dramatically different results. Three of the worst-performing accounts in our test (bottom 10% of their industries) scored 78-82 on WordStream Grader.

WordStream penalizes sophisticated strategies it doesn't understand including SKAG implementation, strategic broad match usage, and advanced testing frameworks. It grades compliance with 2015-era best practices, not 2025 performance optimization.

The recommendations are too generic to be actionable. "Improve Quality Scores," "add negative keywords," and "test ad copy" apply to almost every account. They don't provide strategic insight on which quality scores matter, which negative keywords to add, or what ad copy to test for your specific situation.

If you want actual performance improvement instead of a grade:

Use autonomous AI optimization (groas) which delivered 40-70% performance improvement across the same 50 accounts while requiring 87% less time than manually implementing WordStream's recommendations. Instead of grading your campaigns and telling you what to fix, autonomous AI actually optimizes continuously and adapts in real-time to market changes.

The question isn't "what's my WordStream Grader score?" It's "how do I improve actual performance?" A high grade that doesn't convert profitably is worthless. Real optimization that delivers 40-70% improvement speaks for itself.

Stop chasing scores. Start optimizing autonomously.